Learned Trajectory Embedding for Subspace Clustering

Yaroslava Lochman, Carl Olsson, and Christopher Zach

CVPR 2024

Abstract

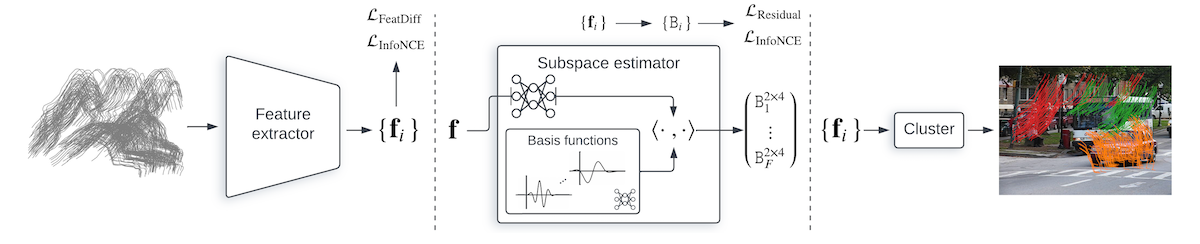

Clustering multiple motions from observed point trajectories is a fundamental task in understanding dynamic scenes. Most motion models require multiple tracks to estimate their parameters, so identifying clusters when multiple motions are observed is very challenging. The difficulty is aggravated further for high-dimensional motion models. The starting point of our work is that the high dimensionality of the motion model can be leveraged to our advantage, because sufficiently long trajectories identify the underlying motion uniquely in practice. Consequently, we propose to learn a mapping from trajectories to embedding vectors that represent the generating motion. The obtained trajectory embeddings are useful for clustering multiple observed motions, and they are also trained, by means of a geometric loss, to contain sufficient information to recover the parameters of the underlying motion. We are therefore able to train this mapping using only weak supervision from a given motion segmentation. The entire algorithm, consisting of trajectory embedding, clustering, and motion parameter estimation, is highly efficient. We conduct experiments on the Hopkins155, Hopkins12, and KT3DMoSeg datasets and show state-of-the-art performance of our proposed method for trajectory-based motion segmentation on full sequences, as well as its competitiveness on occluded sequences.
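The embed, cluster, and estimate pipeline described in the abstract can be illustrated with a toy sketch. It is not the paper's implementation: the learned embedding network is not reproduced, so the embeddings are simulated as noisy cluster centers, and plain k-means plus an SVD subspace fit stand in for the clustering and motion parameter estimation steps. All names (`kmeans`, `fit_subspace`, `residual`) and all sizes (10 frames, 4-dimensional subspaces, 8-dimensional embeddings) are assumptions chosen for illustration.

```python
import numpy as np

def kmeans(X, k, n_iter=50, seed=0):
    """Plain k-means with farthest-point initialisation (generic stand-in)."""
    rng = np.random.default_rng(seed)
    centers = [X[rng.integers(len(X))]]
    for _ in range(k - 1):
        # Next center: the point farthest from all centers chosen so far.
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d)])
    centers = np.stack(centers).astype(float)
    for _ in range(n_iter):
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = X[labels == j].mean(axis=0)
    return labels

def fit_subspace(T, dim):
    """Orthonormal basis (D x dim) of the best-fit linear subspace for rows of T."""
    _, _, Vt = np.linalg.svd(T, full_matrices=False)
    return Vt[:dim].T

def residual(T, B):
    """Distance of each trajectory (row of T) to the subspace spanned by B."""
    return np.linalg.norm(T - T @ B @ B.T, axis=1)

rng = np.random.default_rng(0)
n_frames, dim, n_per_motion = 10, 4, 30   # assumed toy sizes
D = 2 * n_frames                          # stacked (x, y) coordinates per frame

# Two synthetic "motions": trajectories drawn from two random 4-dim subspaces.
bases = [np.linalg.qr(rng.normal(size=(D, dim)))[0] for _ in range(2)]
gt = np.repeat([0, 1], n_per_motion)
traj = np.vstack([rng.normal(size=(n_per_motion, dim)) @ B.T for B in bases])

# Placeholder for the learned embedding: same-motion trajectories land close together.
emb_centers = np.stack([np.zeros(8), 3.0 * np.ones(8)])
emb = emb_centers[gt] + 0.1 * rng.normal(size=(len(gt), 8))

labels = kmeans(emb, k=2)                 # cluster the embeddings
models = {j: fit_subspace(traj[labels == j], dim) for j in range(2)}
own_res = np.array([residual(traj[i:i + 1], models[labels[i]])[0]
                    for i in range(len(traj))])
cross_res = np.array([residual(traj[i:i + 1], models[1 - labels[i]])[0]
                      for i in range(len(traj))])
```

In this toy setting each trajectory has a near-zero residual with respect to its own cluster's subspace and a clearly nonzero residual with respect to the other one, which is the property that makes per-cluster parameter estimation possible once the clustering is correct.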

BibTeX

@InProceedings{lochman2024learned,
  author    = {Lochman, Yaroslava and Olsson, Carl and Zach, Christopher},
  title     = {Learned Trajectory Embedding for Subspace Clustering},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2024},
  pages     = {19092-19102}
}